Machine Learning Projects

Exploring the intersection of data science, artificial intelligence, and real-world applications

GlimzAI - AI-Powered News Summarization Platform

Live project. Designed and deployed a full-stack web application that ingests news daily and generates AI-driven summaries across multiple domains (politics, technology, business, science, and more). By using large language models for text summarization, the platform makes daily news more accessible to readers.

Built the backend with FastAPI, AWS Lambda, and Amazon RDS, with a React frontend hosted on AWS S3 and served through CloudFront. Implemented CI/CD and containerized the services with Docker for scalable cloud deployment. The system processes news feeds automatically and generates concise, informative summaries with state-of-the-art NLP models.
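The ingest-and-summarize step can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the deployed GlimzAI code: the `Article` type, the `summarize_by_domain` function, and the `max_chars` budget are hypothetical, and `summarize` is a stand-in for whatever LLM call the real FastAPI/Lambda service makes.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class Article:
    domain: str  # e.g. "politics", "technology" (hypothetical schema)
    title: str
    body: str


def summarize_by_domain(
    articles: Iterable[Article],
    summarize: Callable[[str], str],
    max_chars: int = 4000,
) -> Dict[str, List[str]]:
    """Summarize each article and group the summaries by domain.

    `summarize` is a placeholder for the LLM call (assumption); each input is
    truncated to `max_chars` to respect a hypothetical prompt budget.
    """
    summaries: Dict[str, List[str]] = defaultdict(list)
    for art in articles:
        text = f"{art.title}\n{art.body}"[:max_chars]
        summaries[art.domain].append(summarize(text))
    return dict(summaries)
```

Keeping the model call behind a plain function argument makes the pipeline easy to test with a stub and to swap between hosted models.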

The platform demonstrates expertise in full-stack development, cloud architecture, and AI integration, showcasing the ability to build production-ready applications that bridge machine learning research with real-world user needs.


Signal Anomaly Detection: An LSTM Approach

In this project, I designed an architecture that combines LSTM and CNN layers. The input has multiple channels: the raw signal, the signal variation, and a channel dedicated to frequency characteristics.
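One way to assemble such a multi-channel input is sketched below. It is an assumption-laden illustration, not the project's preprocessing: the variation channel is taken to be the first difference, the frequency channel to be the peak FFT magnitude over a trailing frame, and `build_channels`, `make_windows`, `frame`, `window`, and `horizon` are all hypothetical names and parameters.

```python
import numpy as np


def build_channels(signal, frame=64):
    """Stack the raw signal, its variation, and a local frequency feature into shape (T, 3)."""
    x = np.asarray(signal, dtype=float)
    # Assumed "variation" channel: first difference, set to 0 at t = 0.
    variation = np.diff(x, prepend=x[0])
    # Assumed frequency channel: peak FFT magnitude over a trailing frame,
    # normalized by frame length (mean removed so the DC term does not dominate).
    freq = np.zeros_like(x)
    for t in range(len(x)):
        seg = x[max(0, t - frame + 1): t + 1]
        freq[t] = np.abs(np.fft.rfft(seg - seg.mean())).max() / len(seg)
    return np.stack([x, variation, freq], axis=-1)


def make_windows(channels, window=128, horizon=1):
    """Slice (T, C) into (N, window, C) inputs and targets `horizon` steps ahead (raw signal)."""
    inputs, targets = [], []
    for start in range(len(channels) - window - horizon + 1):
        inputs.append(channels[start:start + window])
        targets.append(channels[start + window + horizon - 1, 0])
    return np.array(inputs), np.array(targets)
```

Arrays of shape `(N, window, 3)` are the usual input layout for a model whose convolutional layers extract local patterns and whose LSTM layers model the temporal sequence; the model itself is not shown here.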

Because the model was trained only on normal, anomaly-free signals, its prediction error grows markedly when it encounters signals with anomalous behavior. To locate these anomalous segments, I used the Kullback-Leibler divergence to compare the distribution of the prediction error with a standard normal distribution.
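The detection step can be sketched as follows, assuming the errors are standardized with a mean `mu` and standard deviation `sigma` estimated on normal training data, and that the divergence is computed over non-overlapping windows. The bin count, clipping range, window length, and threshold are hypothetical choices, not values from the project.

```python
import math

import numpy as np


def normal_bin_probs(edges):
    """Probability mass of a standard normal N(0, 1) in each histogram bin."""
    cdf = np.array([0.5 * (1.0 + math.erf(e / math.sqrt(2.0))) for e in edges])
    return np.diff(cdf)


def kl_vs_standard_normal(errors, mu, sigma, n_bins=20, lim=4.0, eps=1e-9):
    """KL(P || N(0, 1)), where P is the histogram of standardized prediction errors."""
    z = (np.asarray(errors, dtype=float) - mu) / sigma
    z = np.clip(z, -lim, lim)  # fold outliers into the edge bins
    edges = np.linspace(-lim, lim, n_bins + 1)
    counts, _ = np.histogram(z, bins=edges)
    p = counts / counts.sum() + eps  # smoothing avoids log(0)
    q = normal_bin_probs(edges) + eps
    p /= p.sum()
    q /= q.sum()
    return float(np.sum(p * np.log(p / q)))


def flag_anomalous_windows(errors, mu, sigma, window=200, threshold=0.5):
    """Return (start, kl, is_anomalous) for each non-overlapping window."""
    flags = []
    for start in range(0, len(errors) - window + 1, window):
        kl = kl_vs_standard_normal(errors[start:start + window], mu, sigma)
        flags.append((start, kl, kl > threshold))
    return flags
```

On normal segments the standardized errors stay close to N(0, 1) and the divergence stays small; when the model's predictions break down, the error distribution shifts or widens and the divergence rises sharply.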

A Semantic Segmentation Solution for Oil and Gas Pipeline Component Detection

I developed a semantic segmentation model that is a weighted average of four different models, and used it to detect pipeline components from Magnetic Flux Leakage (MFL) data. To the best of my knowledge, this is the first application of semantic segmentation to this task.

Each of the four ensemble members pairs a decoder architecture (FPN or U-Net) with an encoder (ResNeXt or DenseNet), giving four distinct combinations. The final model achieved 90% recall and 77% precision in detecting components. This work has been accepted to ICMLA 2023.
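The ensembling and evaluation can be sketched as below, reading the four members as every pairing of FPN/U-Net with ResNeXt/DenseNet. This is a simplified illustration: it assumes each member outputs a per-pixel probability map for a single binary class, the weights are hypothetical, and precision and recall are computed at pixel level, which may differ from how the reported component-level metrics were defined.

```python
import itertools

import numpy as np

# The four ensemble members: every pairing of decoder and encoder family.
MEMBERS = list(itertools.product(["FPN", "Unet"], ["resnext", "densenet"]))


def weighted_ensemble(prob_maps, weights):
    """Weighted average of per-model probability maps, each of shape (H, W)."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()  # normalize so the output stays in [0, 1]
    stacked = np.stack(prob_maps, axis=0)  # (M, H, W)
    return np.tensordot(w, stacked, axes=1)  # (H, W)


def precision_recall(pred_mask, true_mask):
    """Pixel-level precision and recall for binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    tp = int(np.sum(pred & true))
    fp = int(np.sum(pred & ~true))
    fn = int(np.sum(~pred & true))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

A binary mask is then obtained by thresholding the averaged map (for example `ensemble >= 0.5`); the threshold trades recall against precision.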

Pipeline Defect Characterization Using a Boosting Model

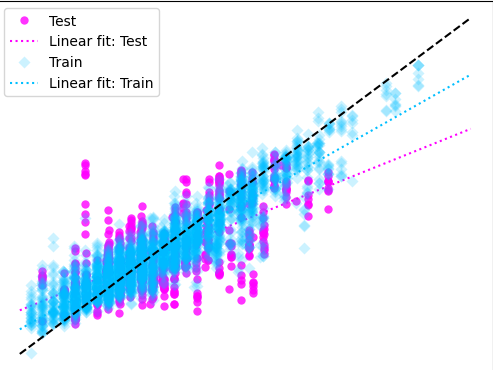

I designed a LightGBM model that predicts defect dimensions, that is, their geometric properties, from non-destructive measurement signals. The features combine signal characteristics at the defect location with the measurement conditions.
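The kind of feature vector described above could be assembled as in this sketch. Every feature here (`peak_amplitude`, `peak_to_peak`, `width_above_half_peak`, `energy`) and the `conditions` dictionary are hypothetical examples chosen for illustration; the project's actual feature set is not specified.

```python
import numpy as np


def defect_features(signal, center, half_width, conditions):
    """Hypothetical features: signal statistics around the defect plus measurement conditions."""
    x = np.asarray(signal, dtype=float)
    seg = x[max(0, center - half_width): center + half_width + 1]
    dev = seg - np.median(seg)  # deviation from a local baseline
    peak = dev.max()
    features = {
        "peak_amplitude": float(peak),
        "peak_to_peak": float(seg.max() - seg.min()),
        # Number of samples at or above half the peak (a crude width measure).
        "width_above_half_peak": int((dev >= 0.5 * peak).sum()) if peak > 0 else 0,
        "energy": float(np.sum(dev ** 2)),
    }
    # Measurement conditions are appended as-is, prefixed to avoid name clashes.
    features.update({f"cond_{k}": float(v) for k, v in conditions.items()})
    return features
```

Rows of such dictionaries can be collected into a table and passed to a gradient-boosting regressor with one target per defect dimension.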

To improve performance, I tuned the hyperparameters with Bayesian optimization in Optuna, using the Tree-structured Parzen Estimator (TPE) algorithm. The final model showed a significant improvement in R² score, MAE, and MSE over the previous model.
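To show what TPE does under the hood, here is a deliberately simplified one-dimensional version. It is not Optuna's implementation (which uses adaptive per-parameter bandwidths, priors, and more); the bandwidth rule, `gamma`, candidate count, and the function names are hypothetical. The core idea is the same: split past trials into "good" and "bad" by a quantile of the objective, fit a Parzen density to each, and pick the candidate that maximizes l(x)/g(x).

```python
import numpy as np


def parzen_logpdf(x, centers, bw):
    """Log-density of a Gaussian Parzen estimator with a shared bandwidth bw."""
    z = (x[:, None] - centers[None, :]) / bw
    dens = np.exp(-0.5 * z ** 2).mean(axis=1) / (bw * np.sqrt(2.0 * np.pi))
    return np.log(dens + 1e-300)


def tpe_minimize(objective, low, high, n_trials=60, n_startup=10,
                 gamma=0.25, n_candidates=24, seed=0):
    """Minimize a 1-D objective on [low, high] with a simplified TPE loop."""
    rng = np.random.default_rng(seed)
    xs, ys = [], []
    for t in range(n_trials):
        if t < n_startup:
            x = float(rng.uniform(low, high))  # random warm-up trials
        else:
            X, Y = np.array(xs), np.array(ys)
            n_good = max(1, int(np.ceil(gamma * len(X))))
            order = np.argsort(Y)
            good, bad = X[order[:n_good]], X[order[n_good:]]
            bw = (high - low) / np.sqrt(len(X))  # hypothetical bandwidth rule
            # Sample candidates from l(x): a random good point plus Gaussian noise.
            cand = rng.choice(good, n_candidates) + rng.normal(0.0, bw, n_candidates)
            cand = np.clip(cand, low, high)
            # Expected improvement under TPE is maximized by the largest l(x) / g(x).
            score = parzen_logpdf(cand, good, bw) - parzen_logpdf(cand, bad, bw)
            x = float(cand[np.argmax(score)])
        xs.append(x)
        ys.append(objective(x))
    best = int(np.argmin(ys))
    return xs[best], ys[best]
```

In practice the objective would be a cross-validated LightGBM error for a given set of hyperparameters; with Optuna, the same loop is handled by a study using its TPE sampler.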

A Boosting Model to Predict Material Properties

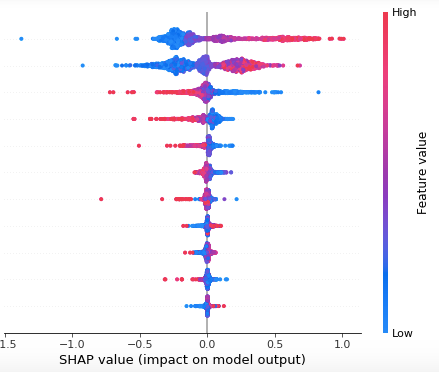

As a postdoctoral researcher, I led a team of Ph.D. students in extracting alloy preparation properties and then building more than 3,000 features derived from quantum simulations. I then trained several boosting models to predict four temperatures that are key to understanding an important alloy property.

The best performer was a CatBoost model, which achieved an average R² score of 0.94 in predicting these temperatures and also accurately predicted the four target temperatures for alloys containing elements absent from the training dataset.
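The two evaluation ideas in this paragraph, averaging R² over several targets and testing on alloys with elements never seen in training, can be sketched as follows. The composition format (a set of element symbols per alloy) and the function names are assumptions for illustration; the element symbols in the usage are placeholders.

```python
import numpy as np


def r2_score(y_true, y_pred):
    """Coefficient of determination for a single target."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


def mean_r2(Y_true, Y_pred):
    """Average R² over target columns (e.g. the four temperatures)."""
    return float(np.mean([r2_score(Y_true[:, j], Y_pred[:, j])
                          for j in range(Y_true.shape[1])]))


def unseen_element_split(compositions, element):
    """Train on alloys without `element`; test on alloys that contain it."""
    has = np.array([element in comp for comp in compositions])
    return np.where(~has)[0], np.where(has)[0]
```

For example, `unseen_element_split([{"Fe", "Ni"}, {"Fe", "Ni", "Co"}], "Co")` returns training indices `[0]` and test indices `[1]`, so the model is scored only on alloys containing an element it never saw during training.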

I also analyzed feature importance with Shapley values and plotted the results. The resulting graph identified the quantum properties with the greatest impact on the four target temperatures.
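The Shapley value of a feature is its average marginal contribution over all subsets of the other features. The brute-force sketch below implements that definition directly, with "absent" features filled from a baseline point (an assumption; other conventions exist). It is exponential in the number of features and meant only to illustrate the idea; for tree ensembles such as CatBoost, tools like the `shap` library's `TreeExplainer` compute these values efficiently.

```python
import itertools
import math

import numpy as np


def exact_shapley(f, x, baseline):
    """Exact Shapley values of f at x; features outside a coalition take baseline values."""
    x = np.asarray(x, dtype=float)
    baseline = np.asarray(baseline, dtype=float)
    n = len(x)

    def value(subset):
        z = baseline.copy()
        idx = list(subset)
        if idx:
            z[idx] = x[idx]  # features in the coalition take their actual values
        return f(z)

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of size k.
            weight = math.factorial(k) * math.factorial(n - k - 1) / math.factorial(n)
            for S in itertools.combinations(others, k):
                phi[i] += weight * (value(S + (i,)) - value(S))
    return phi
```

For a linear model the result reduces to each weight times the feature's deviation from the baseline, and the values always sum to `f(x) - f(baseline)`.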

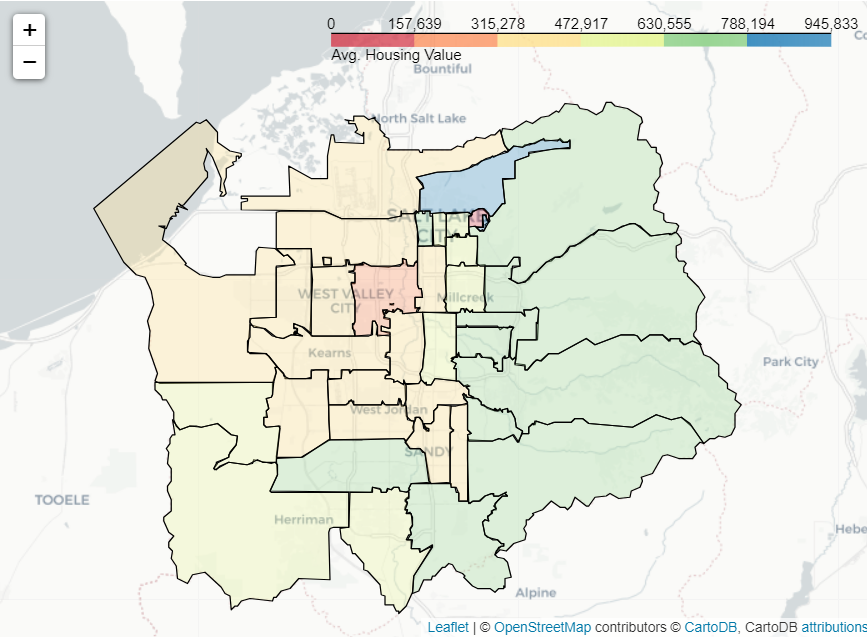

Salt Lake County Housing Insights: Market and Homeowner-Sentiment Analysis

Nerd Realtor. In this project, I scraped demographic information and housing prices for Salt Lake County, organized by zip code. I also extracted more than two hundred homeowner posts for each zip code from a neighborhood website.

Using TextBlob and NLTK, I performed sentiment analysis on these posts and aggregated the results into a happiness index for each zip code. To visualize the data, I used Folium heatmaps inside a web application built with Streamlit.
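A happiness index of this kind could be computed by averaging per-post polarity within each zip code, as in this sketch. The 0 to 100 rescaling and the toy lexicon scorer are hypothetical; with TextBlob installed, `score` could instead be `lambda t: TextBlob(t).sentiment.polarity`, which returns a polarity in [-1, 1].

```python
from collections import defaultdict
from statistics import mean


def happiness_index(posts, score):
    """Mean sentiment per zip code, rescaled from [-1, 1] to [0, 100].

    posts: iterable of (zip_code, text); score: text -> polarity in [-1, 1].
    """
    by_zip = defaultdict(list)
    for zip_code, text in posts:
        by_zip[zip_code].append(score(text))
    return {z: 50.0 * (mean(vals) + 1.0) for z, vals in by_zip.items()}


# Illustrative stand-in for a real polarity scorer (hypothetical lexicon).
TOY_LEXICON = {"great": 1.0, "love": 1.0, "quiet": 0.5, "noisy": -1.0, "bad": -1.0}


def toy_polarity(text):
    """Average lexicon score of the words found in the text, or 0.0 if none match."""
    hits = [TOY_LEXICON[w] for w in text.lower().split() if w in TOY_LEXICON]
    return mean(hits) if hits else 0.0
```

For instance, one positive and one negative post in the same zip code average to a neutral index of 50.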

Users select the demographic, market, and happiness indices they care about, and the resulting overall index is displayed as a Folium heatmap. This conveys a large amount of information at a glance.
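The combination step is not described in detail, so the following is only one plausible approach: min-max normalize each selected index across zip codes, then take a weighted average. The function name, the equal default weights, and the assumption that higher values are always better are all hypothetical; an index such as price might need its direction flipped first. The resulting per-zip scores could then serve as weights for Folium's `HeatMap` plugin at each zip code's coordinates.

```python
def combine_indices(table, selected, weights=None):
    """Combine selected indices into one score per zip code, in [0, 1].

    table: {zip_code: {index_name: value}}; selected: index names to use;
    weights: optional {index_name: weight}, equal weights by default.
    """
    weights = weights or {name: 1.0 for name in selected}
    lo = {n: min(row[n] for row in table.values()) for n in selected}
    hi = {n: max(row[n] for row in table.values()) for n in selected}
    total = sum(weights[n] for n in selected)
    overall = {}
    for zip_code, row in table.items():
        s = 0.0
        for n in selected:
            span = hi[n] - lo[n]
            # Min-max normalization; a constant index contributes a neutral 0.5.
            norm = (row[n] - lo[n]) / span if span else 0.5
            s += weights[n] * norm
        overall[zip_code] = s / total
    return overall
```

Normalizing first keeps indices on very different scales, such as median income and a 0 to 100 happiness score, from dominating one another.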